Python AsyncIO & Concurrency Explained: Event Loops, Tasks & High-Performance Architecture

By Vishal Shah August 25, 2025

Why AsyncIO Is Critical for Modern Python Systems

- High-throughput I/O workloads

- Real-time messaging & streaming

- Web scraping & integrations

- Scalable microservices

- AI/ML workflow orchestration

- Event-driven APIs

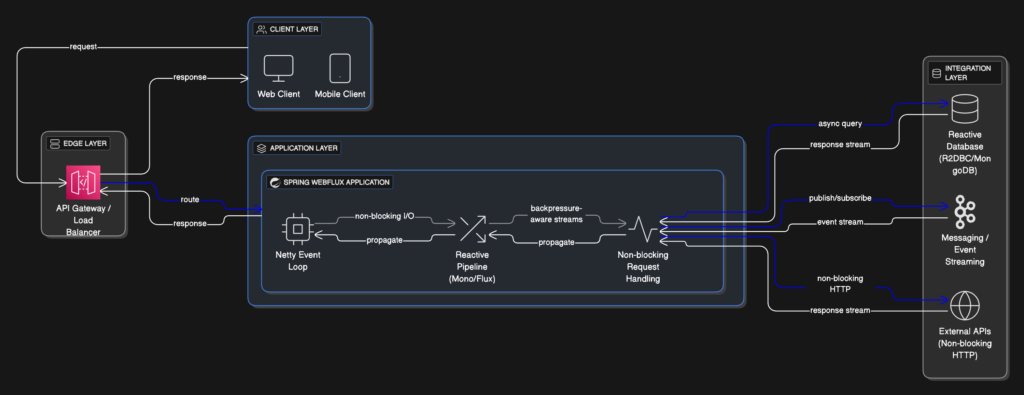

Traditional threading carries real overhead: each thread needs its own stack, and the operating system has to context-switch between threads. AsyncIO avoids this by using non-blocking I/O and a single event loop that runs thousands of tasks cooperatively on one thread.

AsyncIO Fundamentals Explained

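AsyncIO builds on three constructs: coroutines defined with async def, the await keyword, and tasks.

    import asyncio

    async def fetch_data():  # a coroutine function
        return "Hello Async"

    async def main():
        result = await fetch_data()               # run the coroutine and wait for its result
        task = asyncio.create_task(fetch_data())  # schedule it on the event loop as a task
        print(result, await task)

    asyncio.run(main())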

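AsyncIO is driven by an event loop that schedules coroutines cooperatively: a coroutine runs until it reaches an await and then hands control back to the loop, whereas threads are preempted by the operating system at arbitrary points.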
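To make cooperative scheduling concrete, here is a minimal sketch. The worker names and the shared log list are illustrative and do not come from the article. Each worker gives up control only at its await, so the two workers take turns in a predictable order.

```python
import asyncio


async def worker(name: str, steps: int, log: list[str]) -> None:
    """Append one entry per step, yielding to the event loop after each."""
    for i in range(steps):
        log.append(f"{name}{i}")
        # sleep(0) is an explicit yield point: other ready tasks get a turn here.
        await asyncio.sleep(0)


async def run_workers() -> list[str]:
    log: list[str] = []
    await asyncio.gather(worker("A", 3, log), worker("B", 3, log))
    return log


if __name__ == "__main__":
    print(asyncio.run(run_workers()))
```

The printed log alternates between A and B entries. Removing the await line would let worker A finish all of its steps before B starts, because nothing else can run until a coroutine yields.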

Basic AsyncIO Example

    import asyncio

    async def say_hello():
        print("Hello")
        await asyncio.sleep(1)
        print("World!")

    asyncio.run(say_hello())

Running Coroutines Concurrently

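    import asyncio

    async def get_user():
        await asyncio.sleep(2)  # simulated 2-second I/O call
        return "User"

    async def get_orders():
        await asyncio.sleep(3)  # simulated 3-second I/O call
        return "Orders"

    async def main():
        # gather() runs both coroutines concurrently and keeps results in argument order
        results = await asyncio.gather(get_user(), get_orders())
        print(results)  # ['User', 'Orders']

    asyncio.run(main())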

The two coroutines run concurrently, so the total runtime is set by the slowest one (about 3 seconds here), not by the sum of both (5 seconds).

When AsyncIO Is the Wrong Choice


- CPU-heavy workloads

- Image/video processing

- ML inference

- Encryption / hashing

For CPU-bound workloads, use multiprocessing, job queues, Ray, Dask, or Celery.
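The reason CPU-heavy code is a poor fit is that a coroutine which never awaits holds the event loop for its entire run, so every other task waits. The sketch below tries to make that visible by measuring how late a 10 ms heartbeat fires while CPU-bound work runs inline. The names busy_spin, heartbeat, and measure_stall are hypothetical, and the busy loop is only a stand-in for real computation.

```python
import asyncio
import time


def busy_spin(seconds: float) -> None:
    """Stand-in for CPU-bound work: burn CPU without ever yielding."""
    deadline = time.perf_counter() + seconds
    while time.perf_counter() < deadline:
        pass


async def heartbeat(gaps: list[float], stop: asyncio.Event) -> None:
    """Wake every 10 ms and record how long each wake-up really took."""
    last = time.perf_counter()
    while not stop.is_set():
        await asyncio.sleep(0.01)
        now = time.perf_counter()
        gaps.append(now - last)
        last = now


async def measure_stall(spin_seconds: float) -> float:
    """Return the longest heartbeat gap seen while busy_spin() runs inline."""
    gaps: list[float] = []
    stop = asyncio.Event()
    beat = asyncio.create_task(heartbeat(gaps, stop))
    await asyncio.sleep(0.05)   # let the heartbeat settle
    busy_spin(spin_seconds)     # blocks the event loop for the whole duration
    await asyncio.sleep(0.05)
    stop.set()
    await beat
    return max(gaps)


if __name__ == "__main__":
    worst = asyncio.run(measure_stall(0.2))
    print(f"longest heartbeat gap: {worst * 1000:.0f} ms")
```

The longest gap should come out at roughly the spin duration rather than 10 ms, which is the delay every other request or task would experience in a real service.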

Async APIs with FastAPI

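    from fastapi import FastAPI

    app = FastAPI()

    @app.get("/users")
    async def get_users():
        # fetch_from_db() is a placeholder for any awaitable data-access call
        users = await fetch_from_db()
        return users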

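Because the handler hands control back to the event loop while it waits on the database, a single worker process can keep many requests in flight at once. For I/O-bound endpoints this gives high request throughput at low infrastructure cost, and it is a pattern commonly implemented by experienced backend engineering teams.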

Async Databases & Drivers

- asyncpg (PostgreSQL)

- motor (MongoDB)

- aiomysql

    # Inside a coroutine, after "import asyncpg":
    conn = await asyncpg.connect(database="db")
    rows = await conn.fetch("SELECT * FROM customers")
    await conn.close()

AsyncIO + Threads + Processes

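Blocking or CPU-heavy functions can be handed to an executor so the event loop keeps running while they work:

    loop = asyncio.get_running_loop()
    result = await loop.run_in_executor(None, cpu_intensive_task)  # None = default thread pool; pass a ProcessPoolExecutor for CPU-bound work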

| Workload Type | Best Model |
|---|---|
| I/O-bound | AsyncIO |
| CPU-bound | Multiprocessing |
| Mixed | AsyncIO + Executors |
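For the mixed case, one way to combine the models is to push blocking calls into an executor and await the results from the event loop. The sketch below uses a ThreadPoolExecutor and a hypothetical blocking_call function. For pure-Python CPU-bound work, a concurrent.futures.ProcessPoolExecutor can be passed in the same position so the work is not serialized by the GIL.

```python
import asyncio
import time
from concurrent.futures import ThreadPoolExecutor


def blocking_call(n: int) -> int:
    """Hypothetical stand-in for a blocking library call."""
    time.sleep(0.2)
    return n * n


async def run_mixed() -> list[int]:
    loop = asyncio.get_running_loop()
    with ThreadPoolExecutor(max_workers=4) as pool:
        # Each call runs in a worker thread; the event loop stays free meanwhile.
        futures = [loop.run_in_executor(pool, blocking_call, n) for n in range(4)]
        return await asyncio.gather(*futures)


if __name__ == "__main__":
    print(asyncio.run(run_mixed()))
```

With four workers, the four 0.2-second calls overlap instead of running back to back, and the results come back in the order the calls were submitted.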

Async vs Sync Performance Benchmarks

| Tasks | Sync (total time) | Async (total time) |
|---|---|---|
| 100 I/O ops | 4.8s | 0.19s |
| 500 I/O ops | 22s | 0.83s |
| 1,000 I/O ops | 46s | 1.68s |

In the table above, AsyncIO is roughly 25× to 27× faster than the synchronous version for I/O-heavy workloads.
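Figures like these depend heavily on per-request latency and hardware, so it is worth measuring on your own workload. Below is a minimal harness, assuming each I/O operation can be approximated by a fixed 10 ms delay. The delay, the function names, and the operation counts are illustrative and are not the setup behind the table above.

```python
import asyncio
import time

LATENCY = 0.01  # assumed per-operation I/O latency in seconds


def run_sync(n: int) -> float:
    """Perform n simulated I/O operations one after another."""
    start = time.perf_counter()
    for _ in range(n):
        time.sleep(LATENCY)
    return time.perf_counter() - start


async def _io_op() -> None:
    await asyncio.sleep(LATENCY)


async def _run_async(n: int) -> float:
    start = time.perf_counter()
    await asyncio.gather(*(_io_op() for _ in range(n)))
    return time.perf_counter() - start


def run_async(n: int) -> float:
    """Perform n simulated I/O operations concurrently on one event loop."""
    return asyncio.run(_run_async(n))


if __name__ == "__main__":
    for n in (10, 50, 100):
        sync_t, async_t = run_sync(n), run_async(n)
        print(f"{n:>4} ops  sync {sync_t:.2f}s  async {async_t:.2f}s  "
              f"speedup {sync_t / async_t:.1f}x")
```

Replacing the simulated delay with real calls through an async client gives a more honest number, since connection limits and server-side throttling usually cap the speedup well before the event loop does.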

Enterprise Use Cases for AsyncIO

- FinTech: real-time trades

- Logistics: live GPS tracking

- Healthcare: async integrations

- Marketplaces: notifications

- SaaS: webhook processing

Similar high-volume, asynchronous data flows are demonstrated in the Scalable API Data Aggregation platform case study.


Recommended Async Project Structure

    src/
        app.py
        services/
        clients/
        tasks/

AsyncIO Best Practices

- Use asyncio.gather()

- Avoid blocking calls

- Use async-native ORMs

- Background tasks via create_task()

- Monitor with Prometheus & Grafana

For event-driven streaming and async messaging patterns, AsyncIO is often combined with Kafka as explained in our Python Kafka Integration Guide.