MakeMyDocs

Education Website

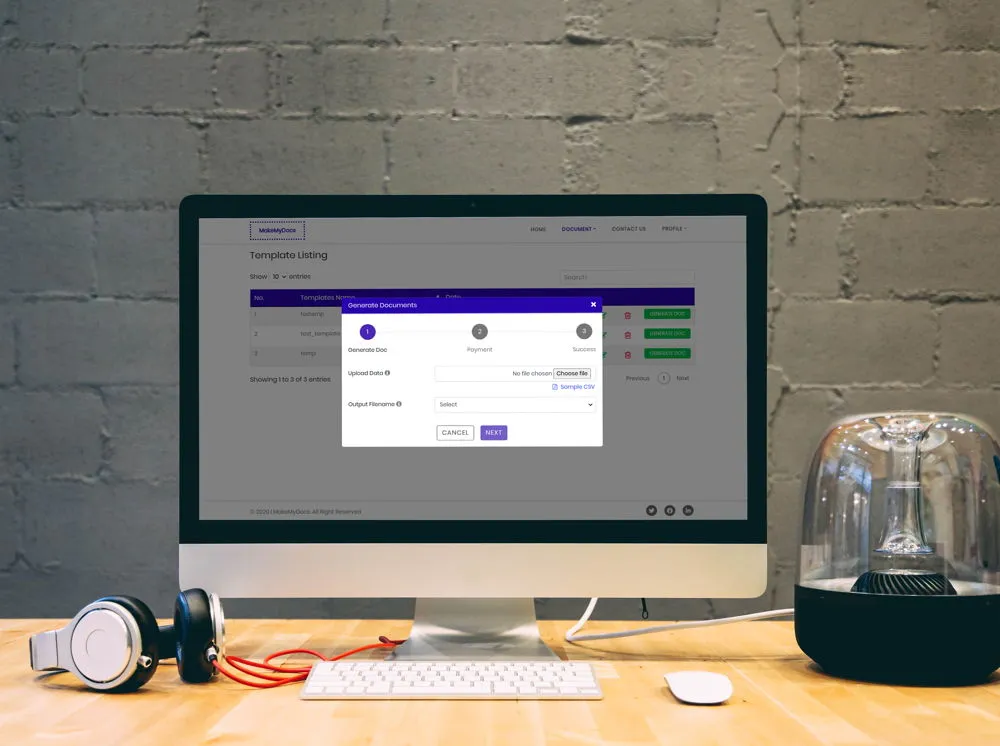

MakeMyDocs is an educational website platform whose primary goal is to let users create custom documents based on their own requirements, with a choice of payment modes. The platform offers a simple UI where users drag and drop templates to create documents quickly and easily. You don’t have to be an IT guru to use this tool: no technical knowledge or special skills are required.

The application lets users or organizations create thousands of secure documents within minutes from a CSV file, with a choice of payment modes. Other features include adding, modifying, and deleting uploaded templates, and using custom templates to generate multiple documents in bulk from the same template. There are no minimum plans and no hidden fees: you pay for what you create, then download and reuse that document as often as you need.

The application follows a client-server architecture, with a robust, optimized backend built on AWS Lambda.
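The CSV-driven bulk generation described above can be sketched roughly as follows. This is a minimal Python illustration, not the platform's actual implementation. It assumes each CSV header names a `$placeholder` in a plain-text template and that each row produces one document. The names `generate_documents` and `lambda_handler` and the event keys `template` and `csv` are hypothetical. Rendering each filled template to a downloadable file is left out.

```python
import csv
import io
import json
from string import Template


def generate_documents(template_text: str, csv_text: str) -> list:
    """Hypothetical helper: fill one copy of the template per CSV row."""
    template = Template(template_text)
    # DictReader maps each row to {column header: cell value}
    reader = csv.DictReader(io.StringIO(csv_text))
    # substitute() raises KeyError if a placeholder has no matching column
    return [template.substitute(row) for row in reader]


def lambda_handler(event, context):
    """Hypothetical AWS Lambda entry point; the event shape is an assumption."""
    docs = generate_documents(event["template"], event["csv"])
    return {
        "statusCode": 200,
        "body": json.dumps({"count": len(docs), "documents": docs}),
    }


if __name__ == "__main__":
    template = "Certificate of Completion\nAwarded to $name for $course."
    rows = "name,course\nAsha,Python Basics\nLiam,Data Structures\n"
    for doc in generate_documents(template, rows):
        print(doc)
        print("---")
```

Using `string.Template` with `substitute()` keeps the sketch dependency-free and fails loudly when a CSV column is missing, which is usually preferable to silently producing an incomplete document in a bulk run.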